aqua is a declarative CLI version manager written in Go. It works as a single binary.

The post aqua – declarative CLI version manager appeared first on LinuxLinks.

/// 14 Dec 2025, 1:21 am ////// Reddit ///

If your Linux system suspends, then immediately re‑suspends after resume, you’re not alone.

This is a confirmed upstream regression introduced in systemd v258.

Symptom: after resume, logind re‑triggers suspend almost immediately (double suspend loop).

Confirmed by bisect: last good release was v257, regression introduced at v258.

Upstream bug report: https://github.com/systemd/systemd/issues/40078

Workaround: downgrade to systemd 257.x until the fix lands (expected in v259).

Posting here so people don’t waste time chasing distro‑specific fixes — it’s upstream.
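To see whether a machine is on the affected release before trying the downgrade, the version check could be scripted. The sketch below is only an illustration: the helper names are hypothetical, it assumes the first line of `systemctl --version` looks like `systemd 258 (...)`, and it treats every 258.x build as potentially affected, which the post does not confirm.

```python
import re
import subprocess
from typing import Optional

# Taken from the post: v257 is the last good release, v258 introduced the regression.
# Treating every 258.x build as affected is an assumption of this sketch.
AFFECTED_MAJOR = 258


def parse_systemd_major(version_output: str) -> Optional[int]:
    """Return the major version from `systemctl --version` output, or None."""
    match = re.match(r"systemd (\d+)", version_output)
    return int(match.group(1)) if match else None


def is_affected(version_output: str) -> bool:
    """True if the reported major version is the one named in the post."""
    return parse_systemd_major(version_output) == AFFECTED_MAJOR


if __name__ == "__main__":
    try:
        result = subprocess.run(
            ["systemctl", "--version"],
            capture_output=True, text=True, check=True,
        )
    except (FileNotFoundError, subprocess.CalledProcessError):
        print("Could not run systemctl; is this a systemd system?")
    else:
        if is_affected(result.stdout):
            print("systemd 258 detected: this release may show the double suspend.")
        else:
            print("systemd major version:", parse_systemd_major(result.stdout))
```

The script only reports the version; it does not downgrade anything, since the right downgrade procedure depends on the distribution.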


/// 14 Dec 2025, 1:34 am ////// Slashdot ///


/// 14 Dec 2025, 1:32 am ////// Phoronix ///

/// 13 Dec 2025, 12:33 pm ////// The Hacker News ///

/// 13 Dec 2025, 2:41 am ////// 9to5Linux ///

Grml 2025.12 GNU/Linux distribution is now available for download based on Debian Testing and powered by Linux kernel 6.17. Here’s what’s new!

The post Grml 2025.12 Linux Distro Is Out Based on Debian Forky and Linux Kernel 6.17 appeared first on 9to5Linux.

/// 12 Dec 2025, 7:25 am ////// Tecmint ///

Modern Linux development has moved beyond the traditional approach of installing everything directly on your system. You now have access

The post How to Use Fedora Toolbx for Isolated Development Environments first appeared on Tecmint: Linux Howtos, Tutorials & Guides.

/// 12 Dec 2025, 3:13 pm ////// GamingOnLinux ///


/// 12 Dec 2025, 12:25 am ////// FSF Blog ///

/// 13 Dec 2025, 3:57 am ////// ITS FOSS ///

NTFSPlus is a fresh implementation of the classic in-kernel ntfs driver. Can it end the current NTFS woes for Linux users?

/// 12 Dec 2025, 8:00 am ////// Fedora Magazine ///

This article series takes a closer look at interesting projects that recently landed in Copr.

Copr is a build-system for anyone in the Fedora community. It hosts thousands of projects with a wide variety of purposes, targeting diverse groups of users. Some of them should never be installed by anyone, some are already transitioning into the official Fedora repositories, and others fall somewhere in between. Copr allows you to install third-party software not found in the standard Fedora repositories, try nightly versions of your dependencies, use patched builds of your favourite tools to support some non-standard use-cases, and experiment freely.

If you don’t know how to enable a repository or if you are concerned about whether it is safe to use Copr, please consult the project documentation.

Vicinae

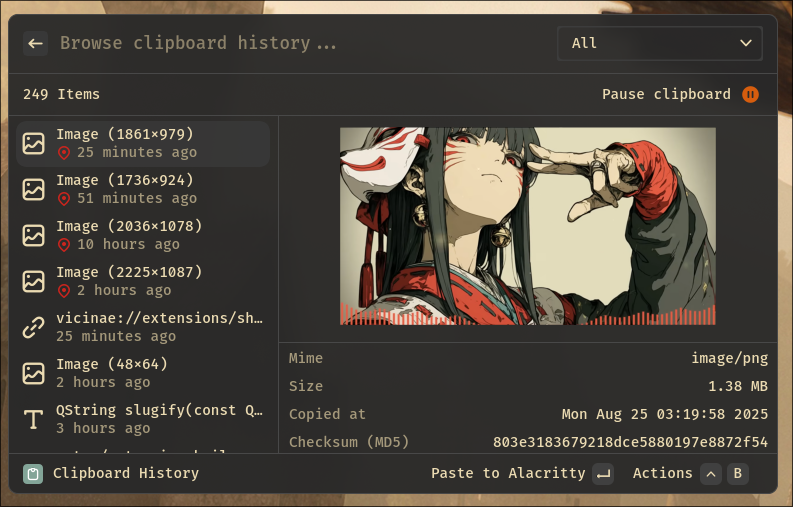

Vicinae is a fast application launcher written in C++/Qt. Inspired by Raycast, it provides instant app and file search and clipboard history. It also includes built-in utilities such as a calculator and web search, along with support for extensions written in TypeScript. It is designed to be highly responsive and native to Wayland environments. If you like keeping your hands on the keyboard or want a customizable, extensible launcher for your desktop, Vicinae may be worth trying.

Installation instructions

The repo currently provides vicinae for Fedora 42, 43, and Fedora Rawhide. To install it, use these commands:

sudo dnf copr enable scottames/vicinae

sudo dnf install vicinae

UZDoom

UZDoom is a modern DOOM source port that builds upon the classic GZDoom engine, offering hardware-accelerated rendering, an updated scripting system, improved mod support, and high-quality audio playback. At the same time, it maintains compatibility with classic WAD files while making the experience smooth on current systems.

Whether you are playing the original episodes or diving into extensive mod packs, UZDoom offers a convenient way to enjoy them.

Installation instructions

The repo currently provides uzdoom for Fedora 42, 43, and Fedora Rawhide. To install it, use these commands:

sudo dnf copr enable nalika/uzdoom

sudo dnf install uzdoom

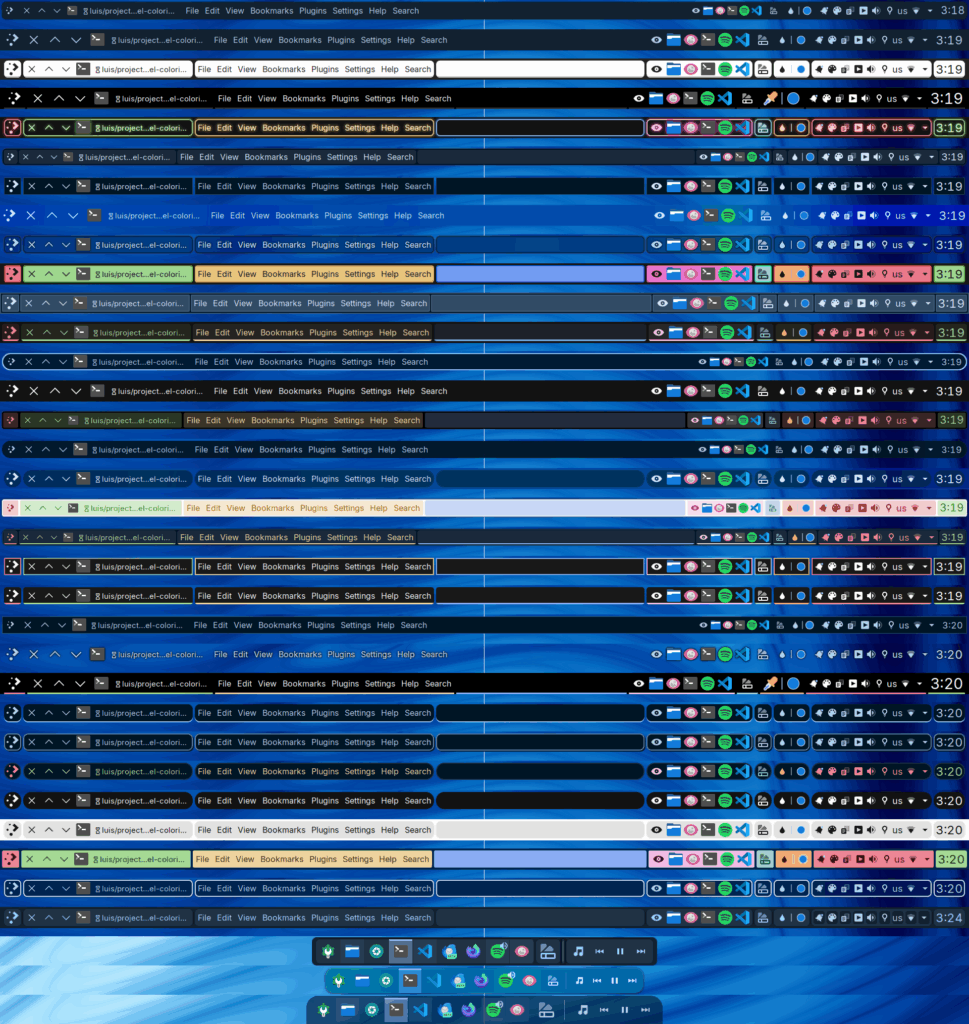

Plasma Panel Colorizer

Plasma Panel Colorizer is a widget for KDE Plasma that allows you to customize the panel’s appearance. It offers options for background tinting, blur, custom opacity levels, shadows, floating panels, and themes that differ from the stock Plasma look. It is updated for Plasma 6, making it easy to adjust your panel exactly the way you want.

Installation instructions

The repo currently provides plasma-panel-colorizer for Fedora 42, 43, and Fedora Rawhide. To install it, use these commands:

sudo dnf copr enable peridot-augustus/plasma-panel-colorizer

sudo dnf install plasma-panel-colorizer

sfizz-ui

Sfizz-ui is the graphical interface for the sfizz sampler engine, an open-source player for SFZ instrument libraries. The UI provides an accessible way to load SFZ instruments, adjust parameters, and integrate the sampler into your workflow. It also includes support for plugin formats such as LV2 and VST3, making it suitable for music creation in a Linux DAW environment.

For musicians, sound designers, or anyone using SFZ sample libraries, sfizz-ui offers a polished interface.

Installation instructions

The repo currently provides sfizz-ui for Fedora 41, 42, and 43. To install it, use these commands:

sudo dnf copr enable lexridge/sfizz-ui

sudo dnf install sfizz-ui
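Because the four repositories do not all target the same Fedora releases, it can help to collect the lists above in one place. The following sketch is only an illustration: the table is copied from the installation instructions in this article, while the function name and the release strings ("41", "42", "43", "rawhide") are hypothetical conventions chosen for the example. It prints the commands rather than running them.

```python
from typing import Dict, List, Set, Tuple

# (Copr repo, package) -> Fedora releases listed in this article.
SUPPORTED: Dict[Tuple[str, str], Set[str]] = {
    ("scottames/vicinae", "vicinae"): {"42", "43", "rawhide"},
    ("nalika/uzdoom", "uzdoom"): {"42", "43", "rawhide"},
    ("peridot-augustus/plasma-panel-colorizer", "plasma-panel-colorizer"): {
        "42", "43", "rawhide",
    },
    ("lexridge/sfizz-ui", "sfizz-ui"): {"41", "42", "43"},
}


def install_commands(release: str) -> List[str]:
    """Return the dnf commands for every project that lists this release."""
    commands: List[str] = []
    for (repo, package), releases in SUPPORTED.items():
        if release.lower() in releases:
            commands.append(f"sudo dnf copr enable {repo}")
            commands.append(f"sudo dnf install {package}")
    return commands


if __name__ == "__main__":
    for line in install_commands("42"):
        print(line)
```

The supported releases reflect the state of each repository when the article was written and may have changed since.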

MX Moksha and AV Linux 25 join ranks with Bodhi Linux and embrace the Enlightenment desktop.